Replicating Chess.com Analysis Locally: My Upwork Gig

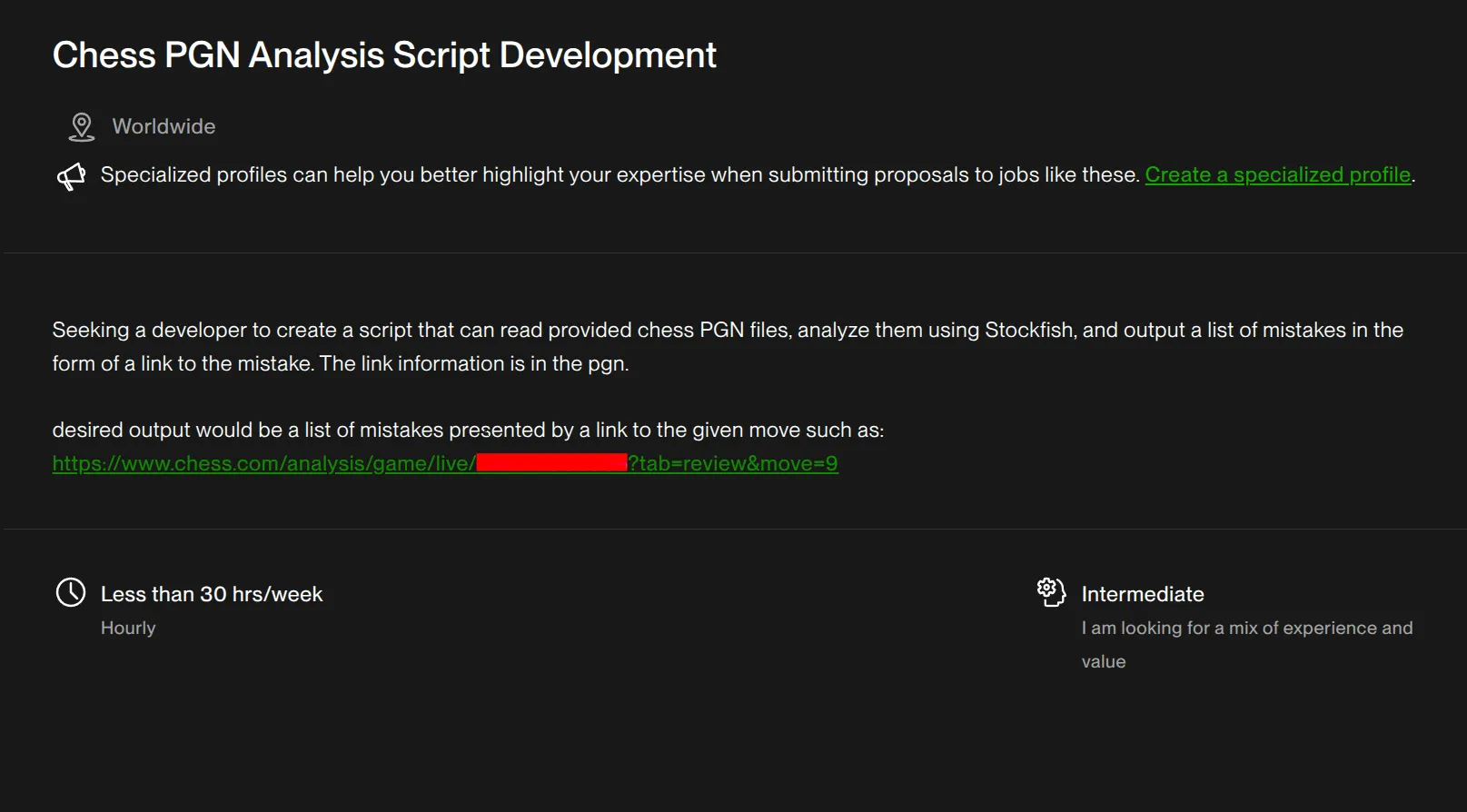

Like many developers, I sometimes find myself scrolling through Upwork, looking for interesting technical challenges. One particular post caught my eye: someone wanted to replicate the core idea of Chess.com’s game analysis, but run it locally. The goal was to analyze their past games and pinpoint those “what was I thinking?” moments for later study.

While the project didn’t move forward due to budget constraints (a common Upwork tale!), the technical puzzle was intriguing. So, I decided to build it anyway. At least I got a blog post out of it ;)

You may already know Chess.com, but for the uninitiated, it’s a popular online chess platform where you can play against people around the world. One of its features is game analysis: after you finish a game, you can review it to see how well you played and where you could have improved. It has free and paid tiers, and the paid tier offers better analysis quality.

Chess.com uses the Stockfish engine for analysis. Stockfish is an open-source chess engine that can evaluate chess positions and play as an opponent. It is one of the strongest chess engines out there and regularly wins the top engine competitions.

Okay, enough chit chat, let’s dive in.

The Task

The client wanted to analyze their past games locally and list the bad moves, so they could study them later.

Some Background

Before we start coding, let’s cover a few essential concepts:

- Past games are stored in PGN format, and plenty of open-source PGN parsers are available. One PGN file can contain multiple games.

- Stockfish analyzes a position by traversing the game tree. A parameter called depth controls how deep it goes, that is, how many moves ahead it looks. A higher depth means a more thorough (and more accurate) analysis, but it also takes significantly longer.

- Stockfish scores the position for both players as they make their moves. The higher the score, the better the position for that player. The scores in this post are expressed in pawns.

- Stockfish can also tell us the best move, or the top-k best moves, for a given board.
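
To make the scoring convention concrete, here is a minimal sketch in plain Python. It assumes the engine's evaluation arrives in centipawns from White's point of view, which is how the code later in this post reads it via `.white()`. The helper name `score_for_player` is made up for illustration and is not part of any library.

```python
def score_for_player(white_centipawns: int, player_is_white: bool) -> float:
    """Convert a White-relative centipawn score to pawns from one player's view.

    Hypothetical helper for illustration; not part of python-chess.
    """
    pawns = white_centipawns / 100.0  # 100 centipawns = 1 pawn
    # A position that is good for White is equally bad for Black
    return pawns if player_is_white else -pawns


# The same position, seen from both sides of the board
print(score_for_player(150, player_is_white=True))   # 1.5
print(score_for_player(150, player_is_white=False))  # -1.5
```

So a single evaluation is enough for both players: one side's +1.5 is the other side's -1.5.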

Setup

I’m using the python-chess package (published on PyPI as chess) for this task. It handles PGN parsing beautifully and provides a clean way to interact with UCI-compatible engines like Stockfish. Here’s the initial setup to load a PGN file and configure the Stockfish engine:

    import chess
    import chess.pgn
    import chess.engine
    from chess.pgn import Game
    import multiprocessing
    import psutil

    STOCKFISH_PATH = "/opt/stockfish/stockfish-ubuntu-x86-64"
    PGN_PATH = "Giri, Anish_vs_Van Buitenen, Herbert_2005.10.20.pgn"
    DEPTH = 18


    def analyze_game(game: Game):
        print(f'{game.headers["White"]} ⚔️ {game.headers["Black"]}')

        engine = chess.engine.SimpleEngine.popen_uci(STOCKFISH_PATH)

        # Use 80% of available memory, rounded down to whole GiB, expressed in MB
        available_memory = int(
            ((psutil.virtual_memory().available * 0.8) // (2**30)) * 1024
        )

        engine.configure(
            {
                "Threads": max(1, multiprocessing.cpu_count() - 1),
                "UCI_LimitStrength": False,
                "UCI_Elo": 3000,
                "Skill Level": 20,  # Max strength
                "Hash": available_memory,
            }
        )

        # The per-move analysis goes here (developed in the sections below)

        engine.quit()


    with open(PGN_PATH) as f:
        while True:
            game = chess.pgn.read_game(f)
            if game is None:
                break
            analyze_game(game)

This code sets up the Stockfish engine, configures it to use the available resources (CPU cores and memory), and loops through the games in a PGN file. Now, the crucial part: how do we use this engine to actually identify the bad moves?
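
One detail in the setup is worth isolating first. The Hash calculation rounds down to whole gibibytes, so on a machine with less than about 1.25 GiB free it evaluates to 0, a value the engine is likely to reject. Below is a minimal sketch of a clamped version. The function name `hash_size_mb`, the 16 MB floor, and the optional cap are my own assumptions for illustration, not something Stockfish or python-chess prescribes. Unlike the setup code, it works in whole megabytes rather than whole gibibytes.

```python
from typing import Optional


def hash_size_mb(
    available_bytes: int,
    fraction: float = 0.8,
    min_mb: int = 16,
    max_mb: Optional[int] = None,
) -> int:
    """Return a hash table size in MB, clamped to [min_mb, max_mb].

    Hypothetical helper: the name, the 16 MB floor, and the optional cap
    are assumptions for illustration.
    """
    # Take the requested fraction of free memory and convert bytes to MB
    size_mb = int(available_bytes * fraction) // (2**20)
    # Never go below the floor, even on a memory-starved machine
    size_mb = max(min_mb, size_mb)
    if max_mb is not None:
        size_mb = min(max_mb, size_mb)
    return size_mb


print(hash_size_mb(8 * 2**30))    # 8 GiB free -> 6553
print(hash_size_mb(10 * 2**20))   # 10 MiB free -> 16 (the floor)
```

In the setup above, the input would be `psutil.virtual_memory().available` and the result would go into the "Hash" entry passed to `engine.configure`.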

Detecting Bad Moves (The First Attempt)

My initial thought was straightforward:

- Look at the board before a player makes a move. Get the Stockfish score.

- Look at the board after the player makes the move. Get the new Stockfish score.

- If the score dropped for the player who moved, that move was probably bad.

To keep things simple, I started by focusing only on White’s moves.

    board = game.board()
    moves = list(game.mainline_moves())

    for index, move in enumerate(moves):
        if board.turn == chess.BLACK:
            # We’re analyzing only White’s moves
            board.push(move)
            continue

        # 1. Get the score before the move
        info_before_move = engine.analyse(board, chess.engine.Limit(depth=DEPTH))
        score_before_move = (
            info_before_move["score"].white().score(mate_score=10000) / 100.0
        )

        # Make the move
        board.push(move)

        # 2. Get the score after the move
        info_after_move = engine.analyse(board, chess.engine.Limit(depth=DEPTH))
        score_after_move = (
            info_after_move["score"].white().score(mate_score=10000) / 100.0
        )

        # 3. Check if the score dropped
        if score_after_move < score_before_move:
            print(
                f"Move {(index // 2) + 1} is probably bad: {move}, "
                f"Diff from previous move: {(score_after_move - score_before_move):.2f}"
            )

I ran this on a game from Lichess. The initial output was… noisy:

    Bako80 ⚔️ bdhayes
    Move 1 is probably bad: d2d4, Diff from previous move: -0.13
    Move 3 is probably bad: c2c4, Diff from previous move: -0.01
    Move 4 is probably bad: b1c3, Diff from previous move: -0.47
    Move 5 is probably bad: e2e4, Diff from previous move: -0.14
    Move 6 is probably bad: a2a3, Diff from previous move: -1.21
    Move 7 is probably bad: d4d5, Diff from previous move: -0.92
    Move 8 is probably bad: f1e2, Diff from previous move: -0.05
    Move 9 is probably bad: c1e3, Diff from previous move: -1.06
    Move 11 is probably bad: d5d6, Diff from previous move: -1.43
    Move 12 is probably bad: d6d7, Diff from previous move: -0.70
    Move 13 is probably bad: d1c2, Diff from previous move: -0.15
    Move 14 is probably bad: e1g1, Diff from previous move: -0.06
    Move 15 is probably bad: b2b4, Diff from previous move: -0.66
    Move 16 is probably bad: a1d1, Diff from previous move: -0.29
    Move 17 is probably bad: d1d2, Diff from previous move: -0.67
    Move 18 is probably bad: f1d1, Diff from previous move: -3.60
    Move 19 is probably bad: a3b4, Diff from previous move: -0.49
    Move 20 is probably bad: c3d5, Diff from previous move: -0.80
    Move 21 is probably bad: c2c3, Diff from previous move: -0.32
    Move 22 is probably bad: d5e7, Diff from previous move: -1.88
    Move 23 is probably bad: d2d6, Diff from previous move: -0.06
    Move 24 is probably bad: c3c2, Diff from previous move: -0.37
    Move 25 is probably bad: f3h4, Diff from previous move: -0.42
    Move 26 is probably bad: g2g3, Diff from previous move: -0.54
    Move 28 is probably bad: d7b7, Diff from previous move: -0.12
    Move 29 is probably bad: g1g2, Diff from previous move: -0.15
    Move 30 is probably bad: c2d2, Diff from previous move: -9.76
    Move 32 is probably bad: d2c2, Diff from previous move: -0.09

That’s… a lot of bad moves. Many flagged moves only caused tiny dips in the score. Let’s introduce a threshold (e.g., only flag moves where the score drops by more than 0.5 pawns). Running it again yields a more reasonable list:

    diff = score_after_move - score_before_move

    if diff < -0.5:  # <==== Threshold = -0.5
        print(
            f"Move {(index // 2) + 1} is probably bad: {move}, "
            f"Diff from previous move: {diff:.2f}"
        )

    Bako80 ⚔️ bdhayes
    Move 4 is probably bad: b1c3, Diff from previous move: -0.75
    Move 6 is probably bad: a2a3, Diff from previous move: -1.14
    Move 7 is probably bad: d4d5, Diff from previous move: -0.93
    Move 9 is probably bad: c1e3, Diff from previous move: -0.80
    Move 11 is probably bad: d5d6, Diff from previous move: -1.56
    Move 12 is probably bad: d6d7, Diff from previous move: -0.76
    Move 15 is probably bad: b2b4, Diff from previous move: -0.52
    Move 17 is probably bad: d1d2, Diff from previous move: -0.55
    Move 18 is probably bad: f1d1, Diff from previous move: -3.15
    Move 22 is probably bad: d5e7, Diff from previous move: -2.11
    Move 26 is probably bad: g2g3, Diff from previous move: -0.80
    Move 30 is probably bad: c2d2, Diff from previous move: -8.94

Much better! This list looks more realistic, and several moves align with Lichess’s own analysis flags. (Note: Stockfish analysis isn’t always perfectly deterministic, especially with multiple threads, so scores may vary slightly from run to run, but the overall picture should be consistent.)

So, problem solved? Time to wrap up?

Not quite. There’s a subtle flaw in this approach.

Flaw: When Scores Lie

Consider this scenario: You make a terrible blunder, perhaps losing your queen for a pawn. Your position is now objectively awful. Stockfish’s evaluation plummets. Now, on your next turn, even if you find the best possible move in that dreadful situation (a move that minimizes the damage), the evaluation might still drop slightly compared to the already-bad position before your move.

Our current script would flag this best-possible-move-in-a-bad-spot as “bad” simply because the score went down. But that doesn’t seem right. Playing the best move available, even when losing, isn’t a mistake in itself. Right?

Let’s look at our example game again, specifically Move 26 (g2g3). Our script flagged it because the score dropped. But how much worse was it than the best possible move in that position?

To find out, we need to:

- Analyze the position before the move to find the best move Stockfish recommends and the score after that best move.

- Compare the score after the actual move played to the score after the best move.

Here’s the code incorporating that check:

    # Inside the loop, for a White move.
    # Passing multipv=1 makes analyse() return a list of info dicts, best line first.
    info_before_move = engine.analyse(
        board, chess.engine.Limit(depth=DEPTH), multipv=1
    )
    score_before_move = (
        info_before_move[0]["score"].white().score(mate_score=10000) / 100.0
    )

    # Try the engine's best move and score the resulting position
    best_move = info_before_move[0]["pv"][0]
    board.push(best_move)
    info_after_best_move = engine.analyse(board, chess.engine.Limit(depth=DEPTH))
    score_after_best_move = (
        info_after_best_move["score"].white().score(mate_score=10000) / 100.0
    )
    board.pop()  # Undo the best move

    # Now push the actual move
    board.push(move)
    info_after_move = engine.analyse(board, chess.engine.Limit(depth=DEPTH))
    score_after_move = (
        info_after_move["score"].white().score(mate_score=10000) / 100.0
    )

    diff = score_after_move - score_before_move

    if diff < -0.5:
        print(
            f"Move {(index // 2) + 1} is probably bad: {move}, "
            f"Diff from previous move: {diff:.2f}, "
            f"Diff from best move: {(score_after_best_move - score_after_move):.2f}",
            "Best move:",
            best_move,
        )

And the output now includes the comparison to the best move:

    Bako80 ⚔️ bdhayes
    Move 4 is probably bad: b1c3, Diff from previous move: -0.68, Diff from best move: 0.57 Best move: e2e4
    Move 6 is probably bad: a2a3, Diff from previous move: -1.39, Diff from best move: 1.36 Best move: a2a4
    Move 7 is probably bad: d4d5, Diff from previous move: -0.83, Diff from best move: 0.83 Best move: a3a4
    Move 9 is probably bad: c1e3, Diff from previous move: -1.08, Diff from best move: 1.12 Best move: b2b4
    Move 11 is probably bad: d5d6, Diff from previous move: -1.58, Diff from best move: 1.51 Best move: d5c6
    Move 12 is probably bad: d6d7, Diff from previous move: -0.80, Diff from best move: 0.63 Best move: b2b3
    Move 15 is probably bad: b2b4, Diff from previous move: -0.60, Diff from best move: 0.55 Best move: a3a4
    Move 18 is probably bad: f1d1, Diff from previous move: -3.12, Diff from best move: 3.57 Best move: c3d5
    Move 22 is probably bad: d5e7, Diff from previous move: -1.69, Diff from best move: 1.58 Best move: h2h4
    Move 24 is probably bad: c3c2, Diff from previous move: -0.52, Diff from best move: 0.41 Best move: c3c2
    Move 26 is probably bad: g2g3, Diff from previous move: -0.71, Diff from best move: 0.27 Best move: e2c4
    Move 30 is probably bad: c2d2, Diff from previous move: -9.95, Diff from best move: 13.41 Best move: e2c4

Look closely at Move 26 (g2g3) again. The score dropped by 0.71 compared to the previous position (Diff from previous move: -0.71). However, the difference between playing g2g3 and playing the best move (e2c4) is only 0.27 (Diff from best move: 0.27). This tells us g2g3 wasn’t optimal, but it was much closer to the best move than the initial score drop suggested. It certainly wasn’t a major blunder.

This reveals the better approach: instead of comparing the score after the move to the score before it, we should compare the score after the actual move to the score after the best possible move. This difference truly quantifies how much potential advantage was lost by not playing the optimal move.
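
As a rough sketch of that rule, the comparison can be written as a small pure function. It assumes both scores are in pawns from White's point of view, as in the snippets above. The names `loss_vs_best` and `is_bad_move`, the reuse of the 0.5-pawn threshold, and the example scores are illustrative choices, not the exact code or data from the final script.

```python
def loss_vs_best(
    score_after_best: float, score_after_move: float, mover_is_white: bool
) -> float:
    """Pawns the mover gave up relative to the engine's best move.

    Both scores are White-relative, so the sign is flipped for Black.
    Hypothetical helper for illustration.
    """
    loss = score_after_best - score_after_move
    return loss if mover_is_white else -loss


def is_bad_move(
    score_after_best: float,
    score_after_move: float,
    mover_is_white: bool,
    threshold: float = 0.5,  # assumed cutoff, in pawns
) -> bool:
    """Flag a move only if it is clearly worse than the best alternative."""
    return loss_vs_best(score_after_best, score_after_move, mover_is_white) > threshold


# White is already losing; the played move is only 0.27 pawns worse than the best one
print(is_bad_move(-1.00, -1.27, mover_is_white=True))  # False

# Black had a move keeping -2.0 (good for Black) but played into +1.5
print(is_bad_move(-2.0, 1.5, mover_is_white=False))    # True
```

Handling Black is just a sign flip here. python-chess can also do that flip on the engine score itself through the score's `pov()` method, which may be cleaner in a full script.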

Wrapping Up

With this improved logic in place, I cleaned up the script and added proper support for both White and Black moves. I also redirected the output to a file instead of printing it to stdout.

Additionally, if the PGN file comes from Chess.com, the script logs a clickable URL for each move, making it easy to jump straight to that point in the game!

You can find the complete, final version of the script on GitHub: https://github.com/CITIZENDOT/AnalysePGN

Output from the final script looks like this:

    Game 1: DanielNaroditsky ⚔️ LPSupi: Bad moves
    Link: https://www.chess.com/game/live/139321870401?username=danielnaroditsky
    --------------------------------------------------
    Move 21 : (d5b4)
    URL: https://www.chess.com/analysis/game/live/139321870401?username=danielnaroditsky?tab=analysis&move=40
    Move 24 : (f5g4)
    URL: https://www.chess.com/analysis/game/live/139321870401?username=danielnaroditsky?tab=analysis&move=46
    Move 27 : (d4d3)
    URL: https://www.chess.com/analysis/game/live/139321870401?username=danielnaroditsky?tab=analysis&move=52
    ==================================================

✨ Interestingly, at the time of writing this post, I discovered that Chess.com has moved its game analysis feature entirely behind its paid membership.

This makes a local tool potentially even more useful! Hopefully, this analyzer can be a valuable resource for chess players looking to review their games without relying solely on paid online platforms.